Does it make you feel more alive? A personal framework for approximately evaluating the ethics of technology as a user and as a technologist

I subscribe to the notion that ethics is entirely rational and maps to game theory. Ethics is about the principled resolution of conflicts of interest between autonomous agents under conditions of shared purpose when playing long games. [1] With this in mind, evaluating the ethics of a system requires modeling its incentives and their consequences. At the most general level, we can take the avoidance of suffering as the shared purpose of all humans on earth. Maintaining global civilization might be another high-level goal. Technologists, who have the ability to create and change technological systems, should do so ethically by considering these goals and projecting the impact of the systems they create into the distant future. What makes this difficult is that the networks of human and non-human entities interacting through technology on a global scale have become overwhelmingly complex. Additionally, technology changes at such a fast pace that national and global elites can no longer anticipate its full impact. This leads to increasing randomness in societies’ trajectories, with no one able to formulate and implement a coherent plan for their future. This makes it all the more critical that technologists evaluate the ethics and impact of their actions, especially given the enormous leverage that computer technology offers.

Game theory is a field that evaluates the consequences of actions, systems, and their incentives in a computational fashion: it considers global and local outcomes under the assumption that every actor acts only on its local incentives. It allows us to analyze games and to design their rules so that desired outcomes are achieved. Climate change is a simple example of game theory and its relationship to ethics. Each player can choose one of two actions: polluting or not polluting. Because polluting is cheaper for each individual player than not polluting, the optimal strategy for every player is to pollute, even though all players together would be better off if nobody polluted. The problem is the incentive structure. Thus the concept of the carbon footprint, which shifts the responsibility for climate change to the individual, is unethical, since the incentives remain set up for everyone to keep polluting. Instead, a global entity needs to update the players’ payoff matrix so that the updated costs lead to behavior that minimizes the global environmental costs.
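To make the incentive problem concrete, here is a minimal sketch of the pollution game as a symmetric two-player game in Python. The numbers are hypothetical and chosen only for illustration: not polluting costs a player `ABATEMENT_COST = 3`, and every polluter inflicts `DAMAGE = 2` on each player. The `tax` parameter is one assumed way (a Pigouvian-style tax on polluting) in which a global entity could update the payoff matrix; the argument above does not prescribe a specific mechanism.

```python
from __future__ import annotations

from itertools import product

ACTIONS = ("pollute", "abstain")

# Hypothetical numbers, chosen only to illustrate the incentive structure.
ABATEMENT_COST = 3.0  # private cost a player pays for not polluting
DAMAGE = 2.0          # harm each polluter inflicts on every player
N_PLAYERS = 2


def payoff(own: str, others: tuple[str, ...], tax: float = 0.0) -> float:
    """Payoff (negative cost) of one player choosing `own` while the others play `others`."""
    polluters = others.count("pollute") + (own == "pollute")
    cost = DAMAGE * polluters  # everyone suffers from all pollution
    cost += tax if own == "pollute" else ABATEMENT_COST
    return -cost


def dominant_action(tax: float = 0.0) -> str | None:
    """Return the strictly dominant action for a single player, or None if there is none."""
    for action in ACTIONS:
        alternative = next(a for a in ACTIONS if a != action)
        if all(
            payoff(action, others, tax) > payoff(alternative, others, tax)
            for others in product(ACTIONS, repeat=N_PLAYERS - 1)
        ):
            return action
    return None


def social_cost(action: str) -> float:
    """Total real cost if every player picks `action` (a tax is a transfer, so it is excluded)."""
    per_player = -payoff(action, (action,) * (N_PLAYERS - 1))
    return N_PLAYERS * per_player


print(dominant_action())                               # pollute
print(social_cost("pollute"), social_cost("abstain"))  # 8.0 6.0
print(dominant_action(tax=2.0))                        # abstain
```

With these numbers, polluting is strictly dominant without a tax, even though universal abstention has the lower total cost (6 versus 8). In this model, any tax on polluting greater than `ABATEMENT_COST - DAMAGE` (here 1.0) makes abstaining the dominant strategy.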

Thinking through the consequences of technological systems for these goals over a long game is cumbersome. Therefore I propose a simplified framework to approximate the ethics of technological systems locally. It is based on the observation that much of the dark side of deploying computer technology seems to lie in its use to control human behavior by offering short-term, shallow fulfillment of human needs and desires. Often, such technology treats humans more like machines instead of helping them to be more human. As a user, you could ask yourself: “Does this, in the long term, help me feel more alive and human?” As a technologist, you could ask yourself: “Does this, in the long term, help users feel more alive and human?” Because this question is quite open to interpretation, I propose referring to the five hindrances described in Buddhist scripture, which are said to hinder progress in meditation and daily life. Technology that strengthens the five hindrances [2] in its users could be said to make them feel less alive and human in the long term:

- Sensory desire: seeking pleasure through the five senses of sight, sound, smell, taste, and physical feeling.

- Ill-will: feelings of hostility, resentment, hatred and bitterness.

- Sloth-and-torpor: half-hearted action with little or no effort or concentration.

- Restlessness-and-worry: the inability to calm the mind and focus one’s energy.

- Doubt: lack of conviction or trust in one’s abilities.

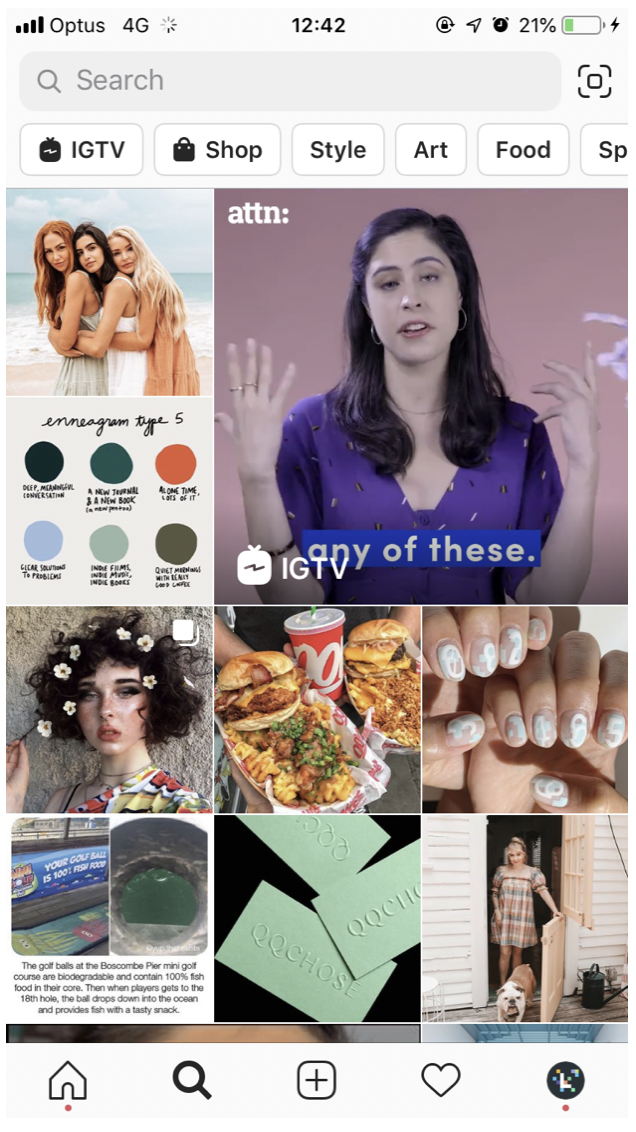

Let us apply this framework to a few examples from the user side. As a first example, let us look at some features of the Instagram iOS app. When I want to search for something, I have to open the search screen (see image below), which offers a small search bar at the top, with the rest of the screen filled with images and videos curated by an automatic recommender system. Does this usage of technology make me feel more alive and human? No, it mostly does not. First, these unwanted recommendations directly subvert my intent to search for something, which already undermines the exercise of my will. Second, the recommended content largely consists of superficial posts optimized for engagement. A picture of a beautiful woman with an inspiring caption will not boost my mood in the long term; it is just a technological knock-off of an inspiring one-to-one human interaction in the physical world. Third, the asymmetry built into following accounts makes for a less human experience than the real world. Since the recommended content mostly comes from accounts with large followings that optimize for engagement, interacting with such an account means receiving less attention than I put in, because users with large followings cannot reciprocate the attention of each of their followers. This example shows that the app strengthens hindrances one, three, and four (sensory desire, sloth-and-torpor, and restlessness-and-worry).

The Instagram iOS search screen.

Another example is video calling, which is part of the Instagram app and many other apps. Video calling provides a symmetric, real-time communication channel between two or more users. Even though this mode of communication lacks the touch, smell, much of the body language, and the high fidelity of physical-world interactions, it makes up for this by keeping human interaction possible over large distances. In addition to hearing the other person’s voice with all its emotional subtleties, I can see their facial expressions, which approximates a physical-world interaction. Keeping in touch with friends I value, whom I would otherwise only get to see in person a couple of times a year, makes me feel more human and alive.

Next, let us look at 3D printing, a technology that allows volumetric printing of shapes from digital 3D models. It turns intangible digital models into physical objects that I can touch and use. Overall, this makes me feel more human and alive. I can come up with an idea for a 3D model, or look at someone else’s, then print it and experience the idea with far more senses than the 2D view on a screen allows.

For some technologies, this framework is insufficient, since they are too abstract and general to be evaluated on a personal level. For example, JavaScript can be used to create interactive web apps that help me understand a machine learning algorithm by displaying a visual model I can step through, which feels livelier and more human than reading a static book. On the other hand, JavaScript can also be used to surveil and record my usage of the Facebook site, and that data is used to maximize engagement, leading to an inevitable race to the bottom of the brain stem. [3] This engages me in the short term but, in the long term, glues my attention to the screen, making me feel less alive and human.