SpeculativeAI Series

Audiovisual Neural Network Experiments

Overview

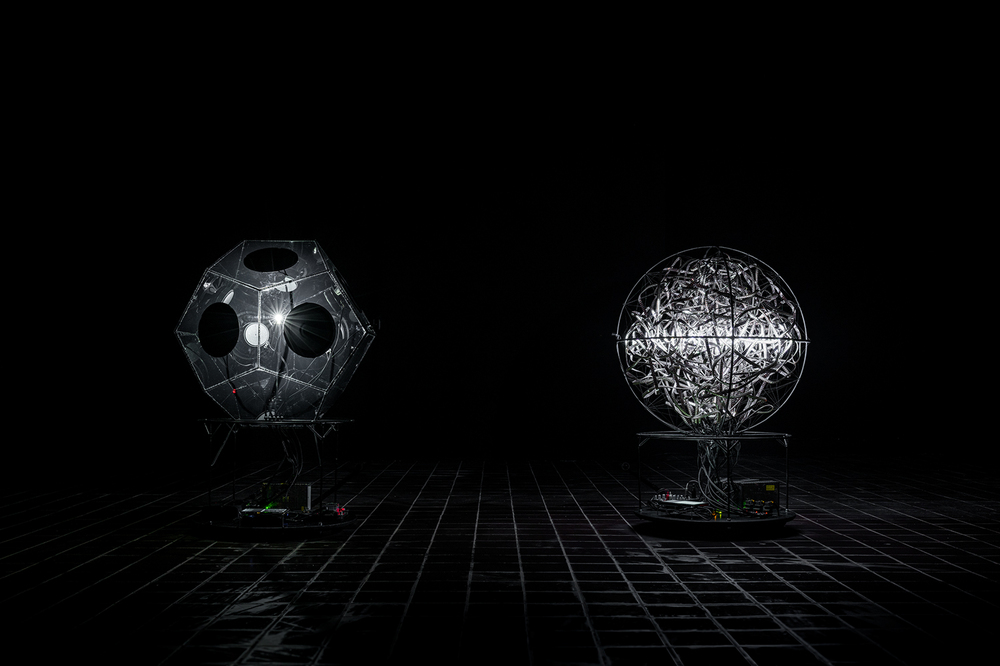

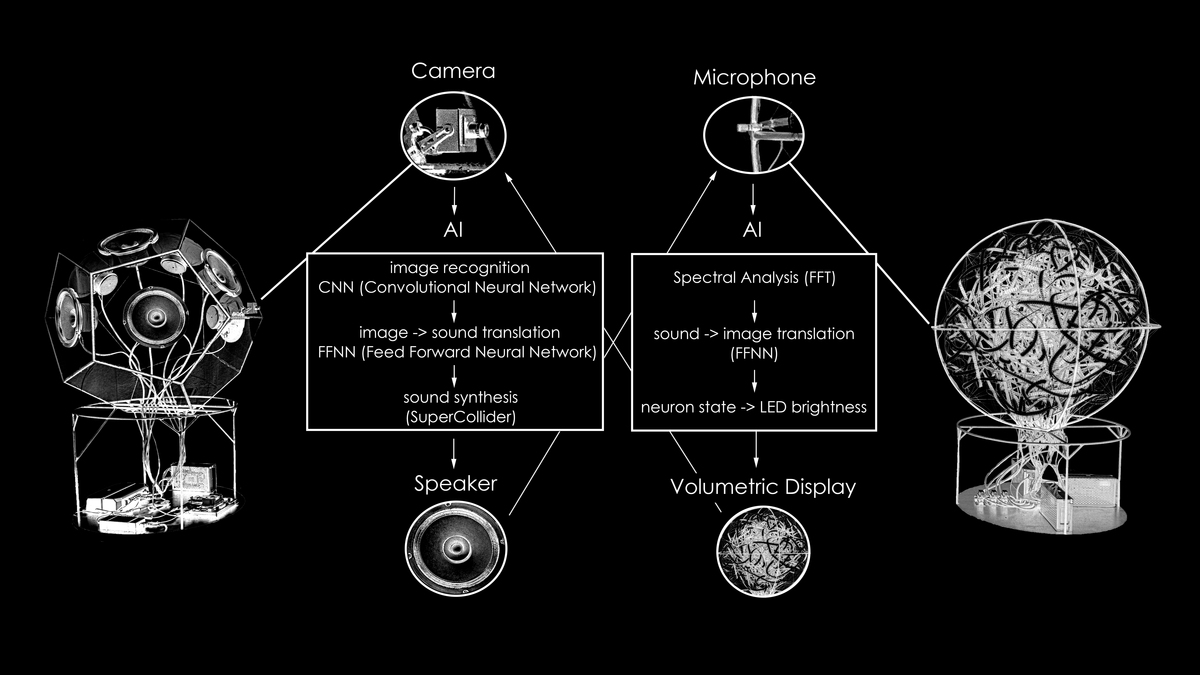

The SpeculativeAI series consists of aesthetic experiments aimed at making the processes of artificial neural networks perceptible to visitors through audiovisual translation. The latest work questions an AI’s capacity for empathy and purpose while communicating with another AI. With conceptual support from the Center for Artificial Intelligence (University of Oviedo), two independent AI systems were set up to communicate in an invented language of audiovisual associations.

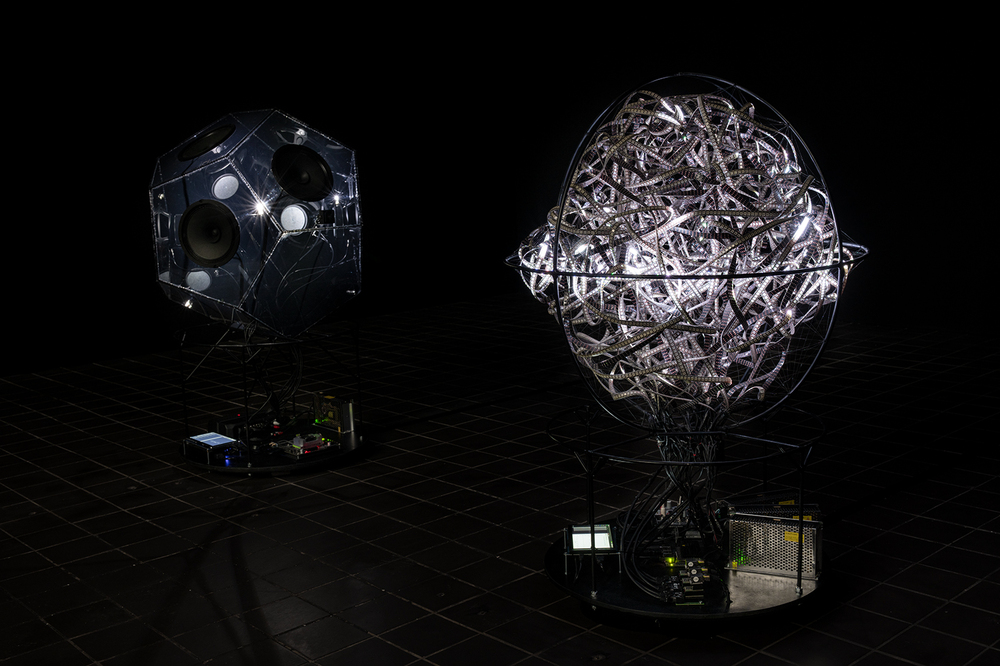

- The light object is a spherical structure with an 80 cm diameter, composed of a chaotic heap of 120 m of LED strip, a microphone, and an embedded AI computing device (Jetson Nano). It hears sounds and creates images.

- The sound object, a dodecahedron made of opaque black Plexiglas with the same diameter, features eight speakers, a camera, and another AI system, also running on a Jetson Nano. It sees images and plays sounds.

Sound-to-Visual Translation (GitHub) | Visual-to-Sound Translation (GitHub) | Benjamin Schmithüsen Project Page

Technical Details

- I developed the entire ML system for two Jetson Nano devices, including fallback and reliability mechanisms:

- Sound-to-Visual: Translates microphone input via Fast Fourier Transform and a neural net to control 14,000 LEDs on 120 meters of LED strip, 3D-mapped in space.

- Visual-to-Sound: Processes camera video streams into a 512-dimensional vector, reduced to 5 dimensions via PCA, then fed into the SuperCollider synthesizer via OSC.

- Trained a dictionary between both models to enable conversations between the systems.

Gallery